On-Device AI

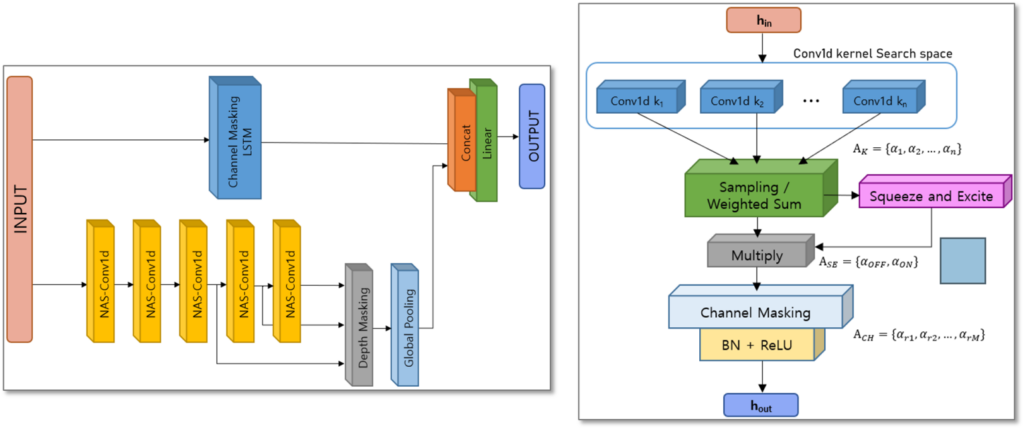

Embedded computing applies computer hardware and software technology across diverse sectors, including mobile, multimedia, network, automotive, and biomedical devices. Ongoing research aims to design systems tuned to specific requirements through advances in computer architecture, operating systems, and compiler technology. Currently, our main emphasis is on developing customized embedded systems for deep learning applications in the automotive and mobile sectors. Our approach includes techniques such as quantization, pruning, knowledge distillation, and neural architecture search. Additionally, we create tools for debugging and understanding deep neural network models, with visualization that improves both the explainability and the reliability of mission-critical AI systems.
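As a rough illustration of two of the compression techniques named above, the sketch below shows symmetric 8-bit quantization and magnitude pruning applied to a flat list of weights. It is a minimal, generic example written for this page, not our actual toolchain; the function names (`quantize_int8`, `dequantize`, `magnitude_prune`) and the per-tensor scaling choice are assumptions made for illustration.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One shared scale for the whole tensor (an illustrative assumption).
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer codes."""
    return [q * scale for q in quantized]


def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitude."""
    drop_count = int(len(weights) * sparsity)
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    dropped = set(by_magnitude[:drop_count])
    return [0.0 if i in dropped else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.01, 0.9]
    codes, scale = quantize_int8(weights)
    print(codes, dequantize(codes, scale))
    print(magnitude_prune(weights, sparsity=0.5))
```

Quantization reduces the storage and arithmetic cost per weight, while pruning reduces the number of non-zero weights; on embedded targets the two are often combined, at the cost of some accuracy that must be measured per model.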

Video Summarization Using Graph Neural Network

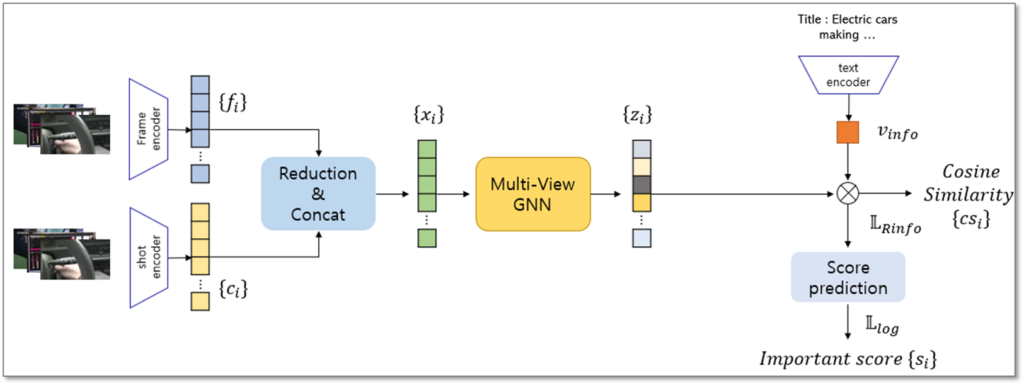

Video summarization is a challenging task that involves condensing lengthy videos, spanning several hours, into concise summary clips. Accomplishing this requires the integration of diverse techniques from image analysis, video processing, and natural language processing to effectively comprehend the video content and generate optimal summaries.

Our research focuses on feature extraction and summarization methods built on deep neural network models, including graph neural networks. With these techniques, we aim to make video summarization more efficient and more accurate across a range of domains and applications.
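To make the graph-based idea concrete, the sketch below treats each frame as a node, links visually similar frames, runs one round of mean-aggregation message passing, and keeps the top-scoring frames. It is a simplified illustration and not our published method: the per-frame feature vectors, the similarity threshold, and the scoring vector `weights` (which a real model would learn) are all hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_graph(features, threshold):
    """Connect frames i and j when their cosine similarity exceeds the threshold."""
    n = len(features)
    neighbors = [[] for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            if cosine(features[i], features[j]) > threshold:
                neighbors[i].append(j)
                neighbors[j].append(i)
    return neighbors


def message_pass(features, neighbors):
    """One mean-aggregation step: average each node with its neighbors."""
    updated = []
    for i, feat in enumerate(features):
        group = [feat] + [features[j] for j in neighbors[i]]
        updated.append([sum(col) / len(group) for col in zip(*group)])
    return updated


def select_frames(features, weights, k, threshold=0.9):
    """Score aggregated node features and return the k best frame indices in time order."""
    neighbors = build_graph(features, threshold)
    hidden = message_pass(features, neighbors)
    # `weights` stands in for a learned scoring layer (hypothetical here).
    scores = [sum(h * w for h, w in zip(vec, weights)) for vec in hidden]
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    return sorted(top)


if __name__ == "__main__":
    frames = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
    print(select_frames(frames, weights=[0.0, 1.0], k=2))
```

A trained graph neural network would replace the fixed averaging and scoring vector with learned layers, but the data flow (graph construction, neighborhood aggregation, per-node scoring, selection) follows the same outline.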

Memory Systems for Deep Learning and Big Data Applications

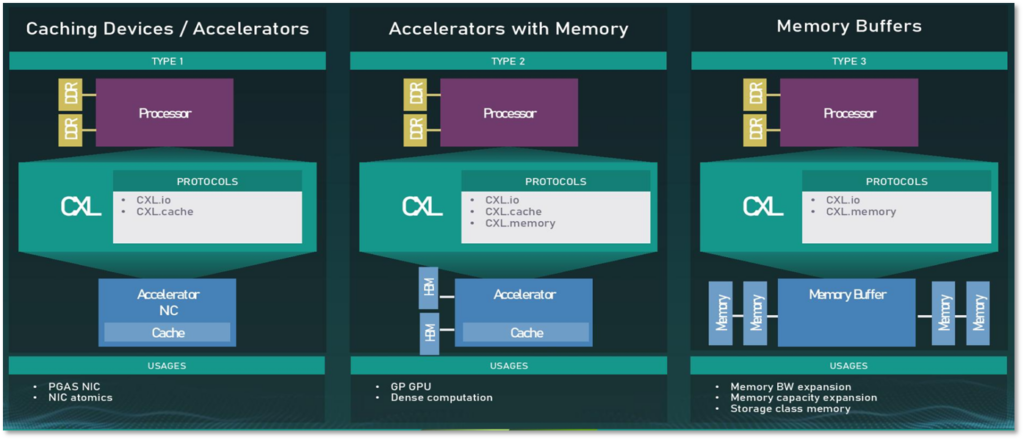

Deep learning applications demand memory systems with both high performance and high capacity to handle intensive training and inference workloads. However, current data center servers face significant limitations in both memory performance and capacity. To address these challenges, interest has surged in technologies such as Compute Express Link (CXL) and disaggregated memory, which offer promising solutions.

Our research focuses on developing memory structures based on CXL technology. These memory structures incorporate both volatile and non-volatile memories, along with accelerators. By combining them, we aim to overcome the existing limitations and unlock the full potential of deep learning applications in data center environments. We work on both the software and hardware sides to deliver efficient, high-capacity memory solutions for high-performance deep learning workloads.